This is a tale of a simple problem, with a relatively simple solution, that ended up being pretty complicated.

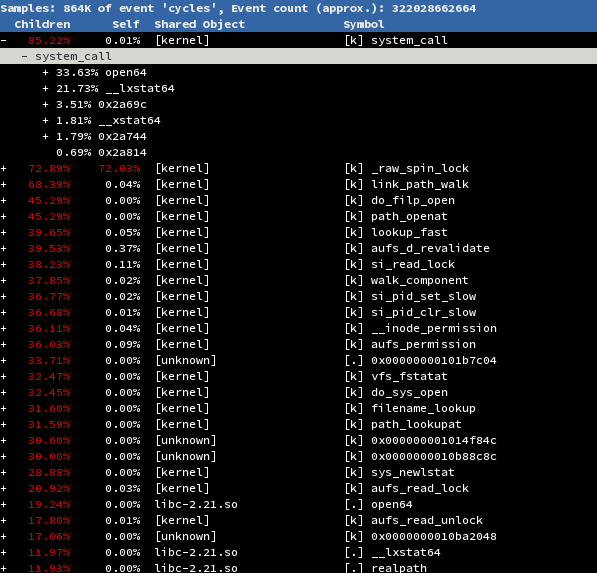

The BMC of OpenPOWER machines expose a serial console. It's pretty useful for getting information as the system is booting, or when it's having issues and the network is down. OpenPOWER machines also have runtime firmware, namely skiboot, which the Linux kernel calls to make certain things happen. One of those is writing to the serial console. There's a function that skiboot exposes, opal_poll_events() (which then calls opal_run_pollers()), which the kernel calls frequently. Among other things, it performs a partial flush of the serial console. And that all works fine...until the kernel panics.

Well, the kernel is in panic. Who cares if it flushes the console? It's dead. It doesn't need to do anything else.

Oh, right. It prints the reason it panicked. Turns out that's pretty useful.

There's a pretty simple fix here that we can push into the firmware. Most kernels are configured to reboot after panic, typically with some delay. In OpenPOWER, the kernel reboots by calling into skiboot with the opal_cec_reboot() function. So all we need to do is flush out the console buffer:

static int64 opal_cec_reboot(void)

{

printf("OPAL: Reboot request...\n");

console_complete_flush(); // <-- what I added

// rebooting stuff happens here...

return OPAL_SUCCESS;

}

Writing a complete flushing function was pretty easy, then call it from the power down and reboot functions. Easy, all nicely contained in firmware.

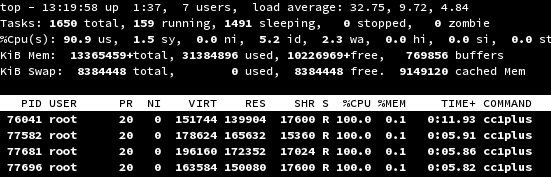

Now, what if the kernel isn't configured to reboot after panic. Or, what if the reboot timer is really long? Do you want to wait 3 minutes to see your panic output? Probably not. We need to call the pollers after panic.

First, I had to figure out what the kernel actually does when it panics. Let's have a look at the panic function itself to figure out where we could work some code in.

In the panic() function, the easiest place I found to put in some code was panic_blink(). This is supposed to be a function to blink the LEDs on your keyboard when the kernel is panicking, but we could set it to opal_poll_events() and it'd work fine. There, problem solved!

Oh, wait. That will never get accepted upstream, ever. Let's try again.

Well, there are #ifdefs in the code that are architecture specific, for s390 and SPARC. I could add an #ifdef to check if we're an OpenPOWER machine, and if so, run the pollers a bunch of times. That would also involve including architecture specific code from arch/powerpc, and that's somewhat gross. Maybe I could upstream this, but it'd be difficult. There must be a better way.

As a kernel noob, I found myself digging into what every function called by panic() actually did, to see if there's a way I could use it. I looked over it at first, but eventually I started looking harder at this line:

kmsg_dump(KMSG_DUMP_PANIC);

It turns out kmsg_dump() does what it says: dumps messages from the kernel. Different parts of the kernel can register their own dumpers, so the kernel can have a variety of dumpers for different purposes. One existing example in OpenPOWER is a kmsg dumper that stores messages in nvram (non-volatile RAM), so you can find it after you reboot.

Well, we don't really want to dump any output, it's already been sent to the output buffer. We just need to flush it. Pretty simple, just call opal_poll_events() a whole bunch of times, right? That would work, though it'd be nice to have a better way than just calling the pollers. Instead, we can add a new API call to skiboot specifically for console flushing, and call it from the kmsg dumper.

Initially, I wired up the skiboot complete console flushing function to a new OPAL API call, and called that from the kernel. After some feedback, this was refactored into a partial, incremental flush so it was more generic. I also had to consider what happened if the machine was running a newer kernel and an older skiboot, so if the skiboot version didn't have my new flushing call it would fall back to calling the pollers an arbitrary amount of times.

In the end, it looks like this:

/*

* Console output is controlled by OPAL firmware. The kernel regularly calls

* OPAL_POLL_EVENTS, which flushes some console output. In a panic state,

* however, the kernel no longer calls OPAL_POLL_EVENTS and the panic message

* may not be completely printed. This function does not actually dump the

* message, it just ensures that OPAL completely flushes the console buffer.

*/

static void force_opal_console_flush(struct kmsg_dumper *dumper,

enum kmsg_dump_reason reason)

{

int i;

int64_t ret;

/*

* Outside of a panic context the pollers will continue to run,

* so we don't need to do any special flushing.

*/

if (reason != KMSG_DUMP_PANIC)

return;

if (opal_check_token(OPAL_CONSOLE_FLUSH)) {

ret = opal_console_flush(0);

if (ret == OPAL_UNSUPPORTED || ret == OPAL_PARAMETER)

return;

/* Incrementally flush until there's nothing left */

while (opal_console_flush(0) != OPAL_SUCCESS);

} else {

/*

* If OPAL_CONSOLE_FLUSH is not implemented in the firmware,

* the console can still be flushed by calling the polling

* function enough times to flush the buffer. We don't know

* how much output still needs to be flushed, but we can be

* generous since the kernel is in panic and doesn't need

* to do much else.

*/

printk(KERN_NOTICE "opal: OPAL_CONSOLE_FLUSH missing.\n");

for (i = 0; i < 1024; i++) {

opal_poll_events(NULL);

}

}

}

You can find the full code in-tree here.

And thus, panic messages now roam free 'cross the countryside, causing developer frustration around the world. At least now they know why they're frustrated.