As a hobbyist programmer and Linux user, I was pretty stoked to experience real work in the IT field that interests me most: Linux. With a mainly disconnected understanding of computer hardware and software, I braced myself to relearn everything I thought I knew. I also worried that, in a world of maintainers, developers and testers, I would not be useful enough to make any real contribution to the company. In actual fact, the employees at OzLabs (IBM ADL) put great effort into making use of my existing skills, were attentive to my current knowledge and just filled in the gaps! The knowledge they've given me is practical and interlinked with hardware, and it has given me the leg-up I'd been itching for to establish my own portfolio as a programmer. I was both honoured and astonished by their dedication to helping me make a truly meaningful contribution!

When applying for the placement, I listed my skills and interests. Having a Mathematics and Science background, I listed among my greatest interests the development of scientific simulations and graphics using tools such as Python's matplotlib and R. On the first day they put me to work researching and implementing a routine in R that would qualitatively model the ability of a system to perform common tasks - a benchmark. A series of these microbenchmarks was made; I was in my element and actually able to contribute to a corporation much larger than I could imagine. The team at IBM reinforced my knowledge from the ground up, introducing the rigorous hardware and corporate elements at a level I was comfortable with.

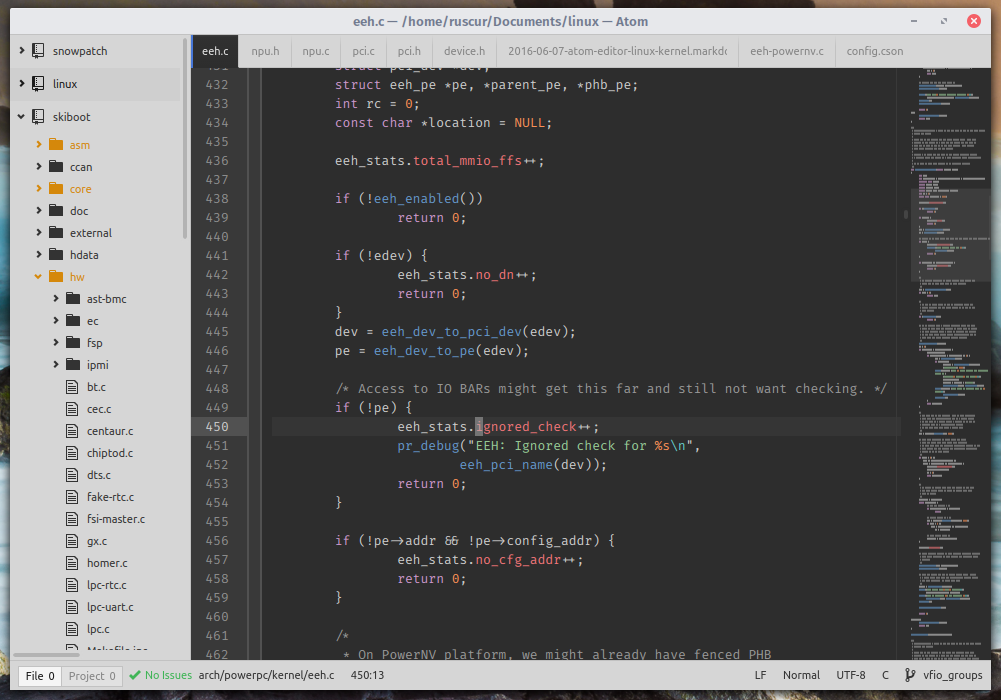

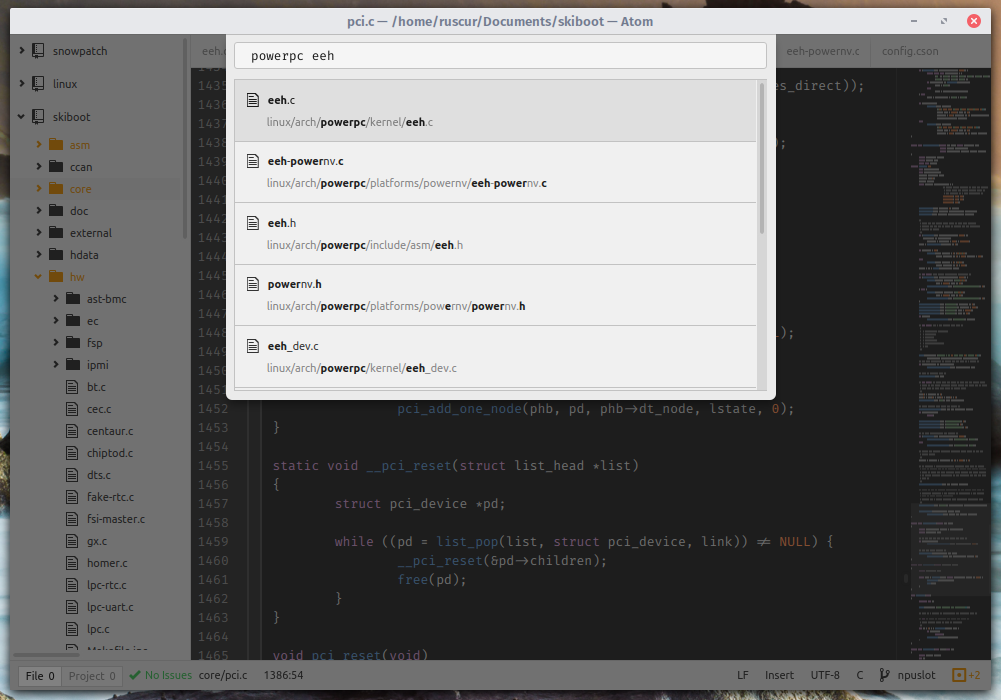

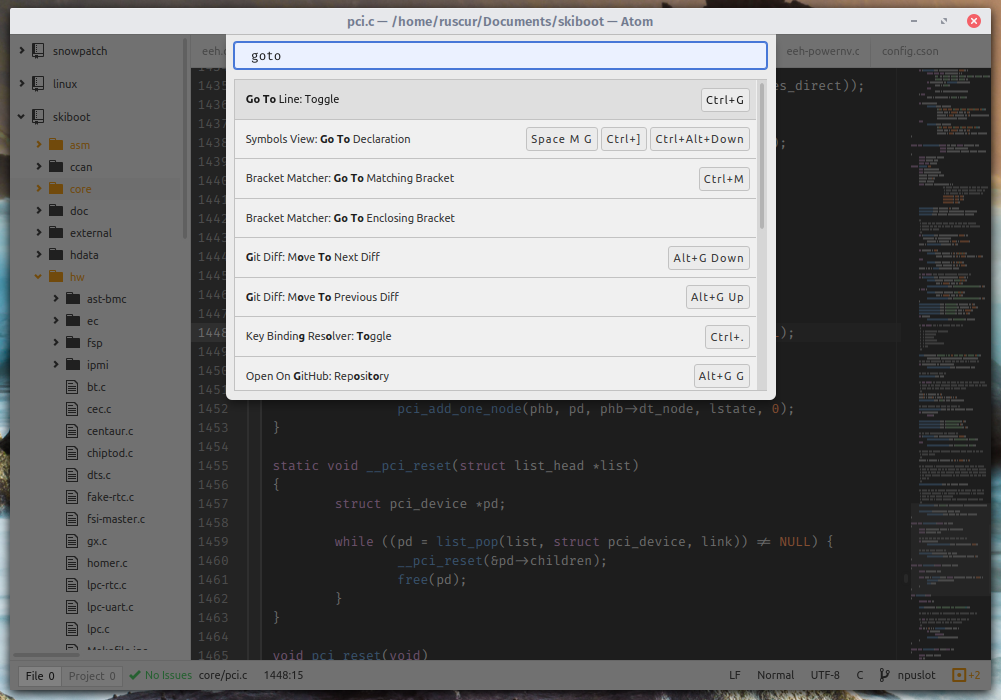

I would say that the greatest single piece of knowledge I took home from the two weeks was an understanding of the Linux kernel project, Git and GitHub. Meeting the arch/powerpc and linux-next maintainers in person put the Linux and open source development cycle in an entirely new perspective. I was introduced to the world of GitHub, and thanks to a few rigorous lessons on Git, I now have access to tools that empower me to write code safely and efficiently, and to build a public portfolio I can be proud of. Most members of the office donated their time to instruct me on all fronts, whether career paths, programming expertise or conceptual knowledge, and the rest were all very good for a chat.

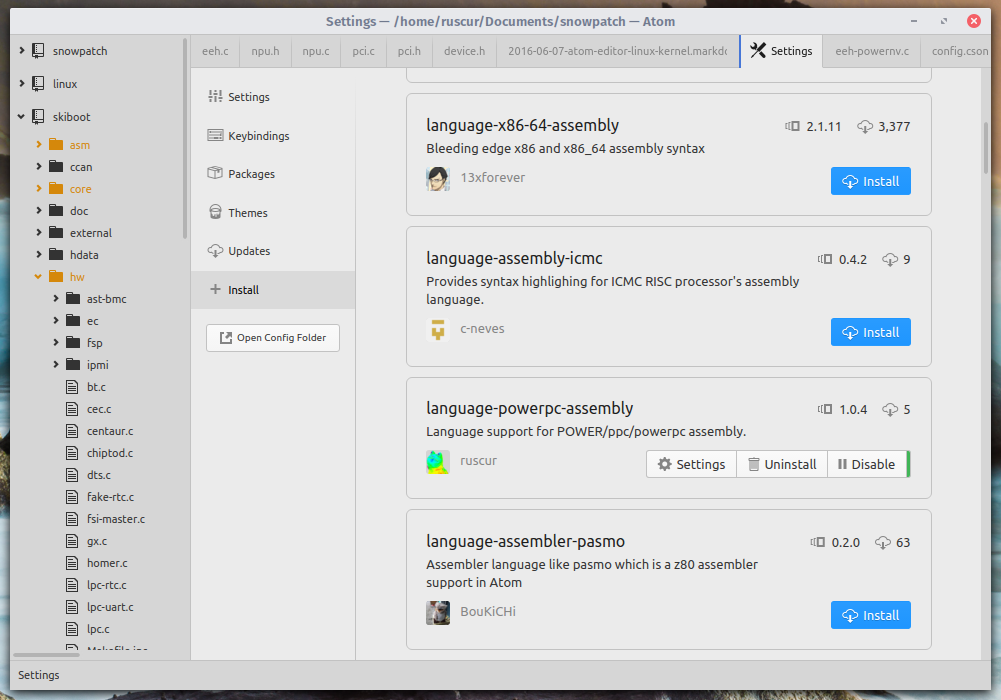

Approaching the tail-end of Year Twelve, I was blessed with some really good feedback and recommendations regarding further study. Whenever I had a query during the two weeks, on anything from work life to programming to which code editor I should use (a source of much contention), the people in the office were very happy to help. Several employees donated their time to teach me long, intensive lessons on software development concepts, including (but not limited to!) a thorough and helpful lesson on Git that was pitched right at my level of understanding.

Working at IBM these past two weeks has not only bridged the gap between my hobby and my professional prospects but, more importantly, established friendships with professionals in the field of software development. Without a doubt, this great experience of an environment that rewards my enthusiasm will stay fondly in my mind as I enter the next chapter of my life!